Multimodal Biometric Template Protection

Project Team: Prof. YUEN, Pong Chi


Project Goal


Multimodal biometric recognition achieves higher accuracy than uni-modal recognition because more distinctive biometric features are available for constructing a discriminative feature representation. Protecting multimodal templates is correspondingly more crucial: a compromised multimodal template is far more damaging than a compromised uni-modal one, because every biometric modality it contains can no longer be used safely in biometric recognition applications. To alleviate this threat, the goal of this project is to design, analyze, and develop a multimodal biometric template protection scheme that best preserves the discriminative power of the biometric representation together with user security and privacy.


Project Description


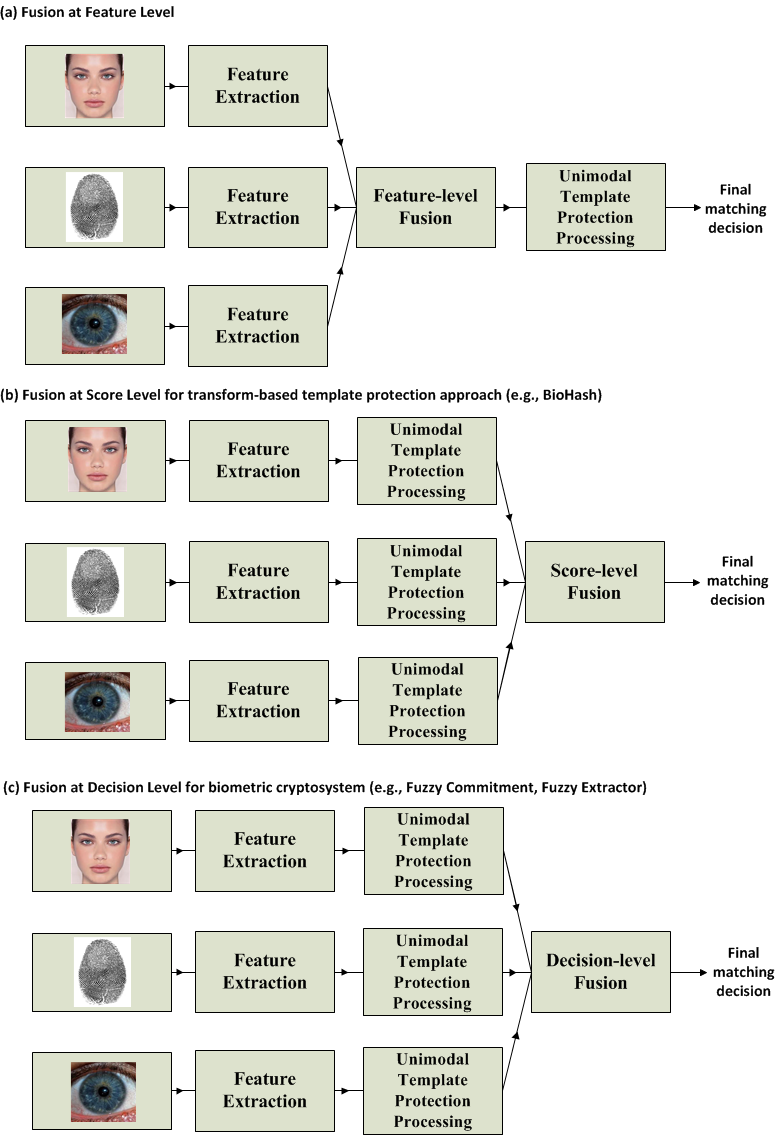

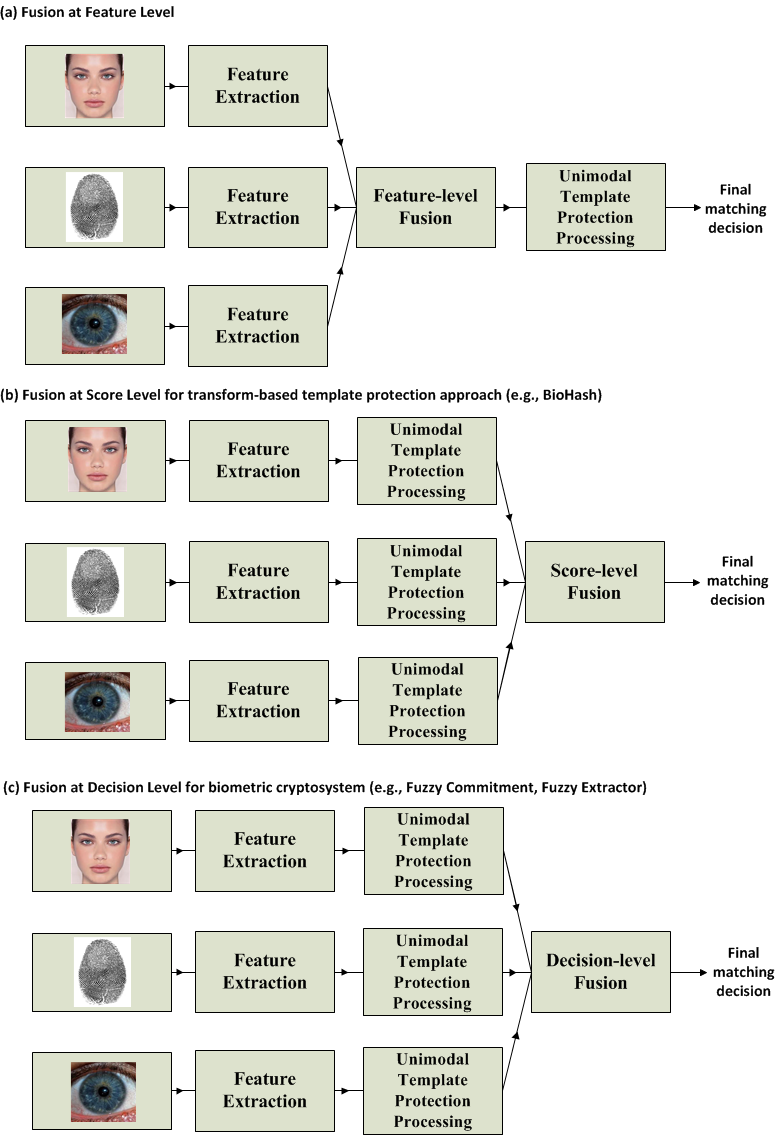

The design of multimodal template protection depends on the level at which the uni-modal biometric data are fused. If fusion is applied at the feature level, the fused features can be treated as if they came from a single uni-modal biometric source, so a secure uni-modal template protection scheme can be applied directly. If fusion is applied at the score level, a uni-modal template protection scheme must be applied to each individual set of extracted features, and the scheme must output a matching score. Feasible schemes of this kind include transform-based protection schemes such as Teoh et al.’s BioHash and Ratha et al.’s non-invertible transform. Alternatively, fusion can be applied at the decision level, where a uni-modal template protection scheme is applied to each individual set of extracted features to yield one decision per modality. In this case, both transform-based template protection schemes and biometric cryptosystems (e.g., fuzzy commitment and fuzzy extractor) can be applied.


Figure 1. Multimodal Template Protection with fusion applied at (a) feature; (b) score; and (c) decision levels.
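The three fusion levels can be illustrated with a minimal Python sketch. The project description does not name specific fusion rules, so plain concatenation, a weighted-sum score rule, and a majority vote are assumptions chosen only for illustration, and all function names are hypothetical. In a protected system, the scores and decisions would come from matching protected templates rather than raw features.

```python
from typing import List, Sequence

Vector = List[float]


def feature_level_fusion(chunks: Sequence[Vector]) -> Vector:
    """Concatenate per-modality feature chunks into one fused vector.

    The fused vector can then be handed to a single uni-modal
    template protection scheme.
    """
    fused: Vector = []
    for chunk in chunks:
        fused.extend(chunk)
    return fused


def score_level_fusion(scores: Sequence[float], weights: Sequence[float]) -> float:
    """Combine per-modality matching scores with a weighted-sum rule (assumed).

    Each score is assumed to be produced by a protection scheme that
    outputs a matching score, e.g. a transform-based scheme.
    """
    return sum(w * s for w, s in zip(weights, scores)) / sum(weights)


def decision_level_fusion(decisions: Sequence[bool]) -> bool:
    """Combine per-modality accept/reject decisions by majority vote (assumed)."""
    return sum(decisions) * 2 > len(decisions)


fused_features = feature_level_fusion([[0.1, 0.2], [1.0]])
fused_score = score_level_fusion([0.8, 0.4], weights=[1.0, 1.0])
fused_decision = decision_level_fusion([True, True, False])
```

Only the first function requires the modalities to share one template. The other two keep one protected template per modality, which is why separate storage becomes relevant to the security analysis.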


Suppose that we are given n different biometric templates of k representation types generated by t feature extractors. In this project, we aim to tackle three research issues. The first two concern feature-level fusion, while the third applies to all fusion levels.


Template alignment

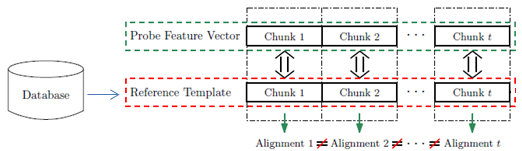

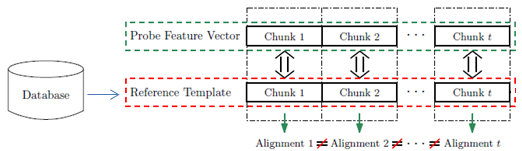

In feature-level fusion, the t chunks of extracted biometric features are concatenated (in the simplest case) to form a fused feature vector. In the uni-modal setting, aligning a probe biometric such as an iris code with the reference template inside a template protection scheme is still feasible (e.g., by attempting decommitment at various shift positions in an iris fuzzy commitment scheme). In the multimodal setting, alignment becomes problematic because t chunks of extracted features with different alignments are fused into a single template. A common optimal alignment for all chunks is unlikely to exist, yet the recognition performance of the fused feature can degrade significantly unless each individual chunk is properly aligned. This creates a strong need for alignment-invariant uni-modal and multimodal biometric cryptosystems and template protection schemes.

Figure 2. Template alignment within a multi-biometric template protection scheme.
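To make the alignment problem concrete, the following Python sketch implements a toy fuzzy commitment over a fused binary template and attempts decommitment at every combination of per-chunk circular shifts. It is a brute-force baseline for illustration, not the scheme developed in this project. The repetition code with factor `REP`, the use of SHA-256, and all function names are assumptions, and the fused template length is assumed to be a multiple of `REP`.

```python
import hashlib
import itertools
import secrets
from typing import List, Optional, Sequence, Tuple

Bits = List[int]
REP = 5  # repetition factor (assumed); tolerates up to 2 bit flips per block


def encode(key: Bits) -> Bits:
    """Repetition-code encoder: repeat every key bit REP times."""
    return [b for b in key for _ in range(REP)]


def decode(codeword: Bits) -> Bits:
    """Repetition-code decoder: majority vote inside each block of REP bits."""
    return [int(sum(codeword[i:i + REP]) * 2 > REP)
            for i in range(0, len(codeword), REP)]


def digest(key: Bits) -> str:
    return hashlib.sha256(bytes(key)).hexdigest()


def commit(template: Bits) -> Tuple[Bits, str]:
    """Bind a random key to the template; store only the helper data and key hash."""
    key = [secrets.randbelow(2) for _ in range(len(template) // REP)]
    helper = [c ^ x for c, x in zip(encode(key), template)]
    return helper, digest(key)


def rotate(bits: Bits, shift: int) -> Bits:
    """Circular left shift; a negative shift rotates to the right."""
    shift %= len(bits)
    return bits[shift:] + bits[:shift]


def decommit(helper: Bits, key_hash: str, probe_chunks: Sequence[Bits],
             max_shift: int) -> Optional[Tuple[int, ...]]:
    """Try every combination of per-chunk shifts; return the first that succeeds."""
    shifts = range(-max_shift, max_shift + 1)
    for combo in itertools.product(shifts, repeat=len(probe_chunks)):
        probe = [b for chunk, s in zip(probe_chunks, combo)
                 for b in rotate(chunk, s)]
        key = decode([h ^ x for h, x in zip(helper, probe)])
        if digest(key) == key_hash:
            return combo
    return None


# Enrolment on two fused chunks, then a probe whose chunks are misaligned differently.
chunk_a = [1, 1, 0, 1, 0, 0, 0, 1, 0, 1]
chunk_b = [0, 1, 1, 0, 0, 1, 0, 0, 1, 1]
helper, key_hash = commit(chunk_a + chunk_b)
probe = [rotate(chunk_a, 1), rotate(chunk_b, -2)]
found = decommit(helper, key_hash, probe, max_shift=2)
```

With t chunks and a shift range of ±s, the search visits up to (2s + 1)^t candidates, so the cost grows exponentially with the number of fused chunks. Each extra candidate is also an extra chance for a false accept, which is one way to see why alignment-invariant representations are attractive.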

A unified discriminative feature representation

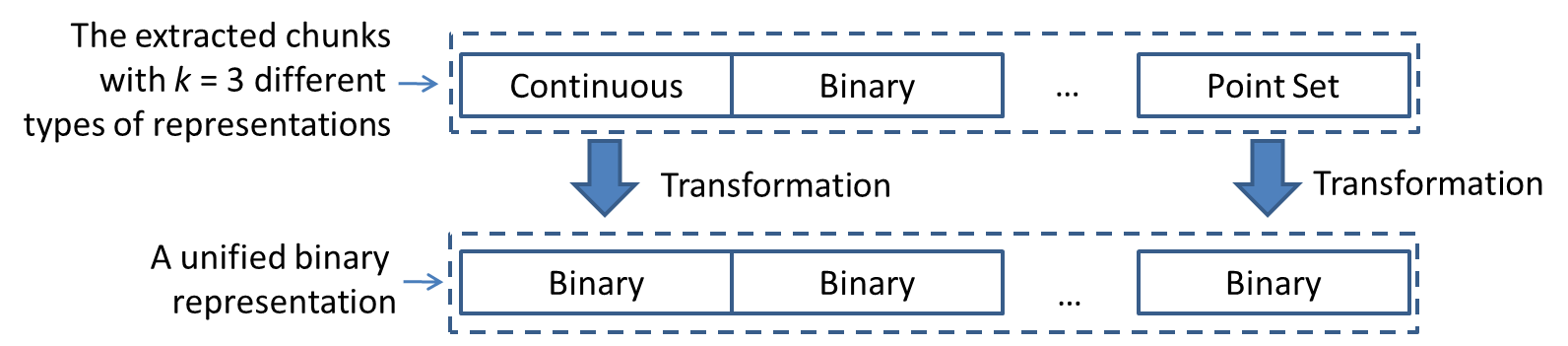

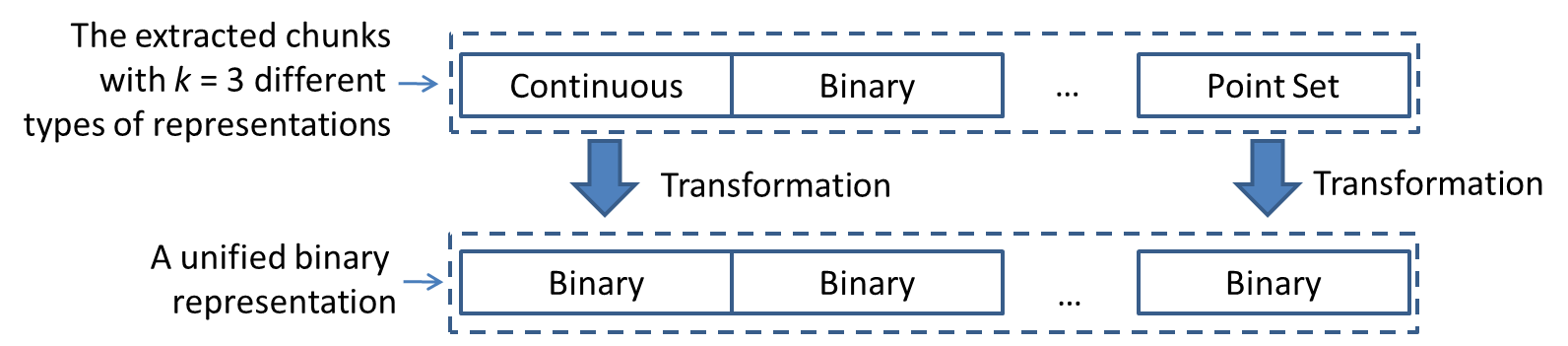

In feature-level fusion, up to k types of feature representation (e.g., binary, discrete, or continuous) can be obtained, depending on the output type of the feature extractors. To apply a common uni-modal template protection scheme to the fused template, a common representation must be derived for all k types. For example, minutiae triplets can be transformed into the same binary representation as the iris code so that a uni-modal fuzzy commitment or fuzzy extractor can be applied. One requirement is that the transformation must not cause a drastic loss of information, which would jeopardize recognition performance. Another issue is the tradeoff between feature discriminability and system entropy. Because false rejection is lower bounded by the error correction capacity, unbounded use of error correction to increase genuine acceptance may leave the system vulnerable to passive impersonation attacks through an unacceptably high false acceptance rate. Hence, there is an inevitable tradeoff between recognition accuracy and system security. We are still seeking a good tradeoff between these two factors for a multimodal biometric system, and further investigations along these directions are under way.
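The tradeoff between error correction and false acceptance can be quantified under an idealized model. The sketch below assumes that template bits are independent and uniformly distributed, which real biometric features generally are not, so the values are optimistic illustrations rather than estimates for any actual system. The function name is hypothetical.

```python
from math import comb


def false_accept_probability(n_bits: int, t_errors: int) -> float:
    """Probability that a uniformly random n-bit probe lies within Hamming
    distance t_errors of a fixed n-bit template (idealized i.i.d. bit model)."""
    return sum(comb(n_bits, i) for i in range(t_errors + 1)) / 2 ** n_bits


# Raising the error-correction capacity t enlarges the accepted Hamming ball.
for t in (0, 8, 16, 24, 32):
    print(t, false_accept_probability(64, t))
```

The probability grows monotonically with the correction capacity, which is why tolerating more intra-user variation necessarily admits more impostor attempts.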

Figure 3. A toy example of transforming extracted chunks with 3 different representation types into a unified binary feature representation.
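One simple way to obtain a unified binary representation is sketched below in Python. It is not the transformation used in this project: thresholding continuous features, Gray-coding discrete features, and all function and parameter names are assumptions made for illustration. Discrete values are assumed to be non-negative integers smaller than `2 ** width`.

```python
from typing import List, Sequence

Bits = List[int]


def binarize_continuous(values: Sequence[float], thresholds: Sequence[float]) -> Bits:
    """One bit per feature: 1 if the value exceeds its threshold (e.g. a population mean)."""
    return [int(v > t) for v, t in zip(values, thresholds)]


def binarize_discrete(values: Sequence[int], width: int) -> Bits:
    """Fixed-width reflected Gray code, most significant bit first.

    Adjacent integers differ in exactly one bit, so a small quantization
    error leads to a small Hamming distance.
    """
    bits: Bits = []
    for v in values:
        gray = v ^ (v >> 1)
        bits.extend((gray >> i) & 1 for i in reversed(range(width)))
    return bits


def unify(binary: Sequence[int], discrete: Sequence[int], continuous: Sequence[float],
          thresholds: Sequence[float], width: int) -> Bits:
    """Concatenate the three chunks after mapping each to bits."""
    return (list(binary)
            + binarize_discrete(discrete, width)
            + binarize_continuous(continuous, thresholds))


unified = unify(binary=[1, 0], discrete=[2, 3], continuous=[0.2, 0.9],
                thresholds=[0.5, 0.5], width=2)
```

The resulting bit string can be passed to a binary scheme such as fuzzy commitment. Single-bit thresholding discards most of the information in a continuous feature, so a practical transformation would need to be chosen with the information-loss requirement above in mind.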

Multimodal system security

Since the irreversibility and non-linkability of multimodal biometric systems are rarely analyzed, the corresponding security measures need to be reformulated and extended, taking into account that separate storage of protected templates may affect system security. In this project, we are working towards a generic methodology for estimating the security of a multi-biometric template protection scheme in an information-theoretic way.


For further information on this project, please contact Prof. YUEN, Pong Chi.
