
Learning Dynamic Patient Representations from Multi-Modal Electronic Health Record Data Using a Deep Tensor Factorization Approach (William K. Cheung et al.)

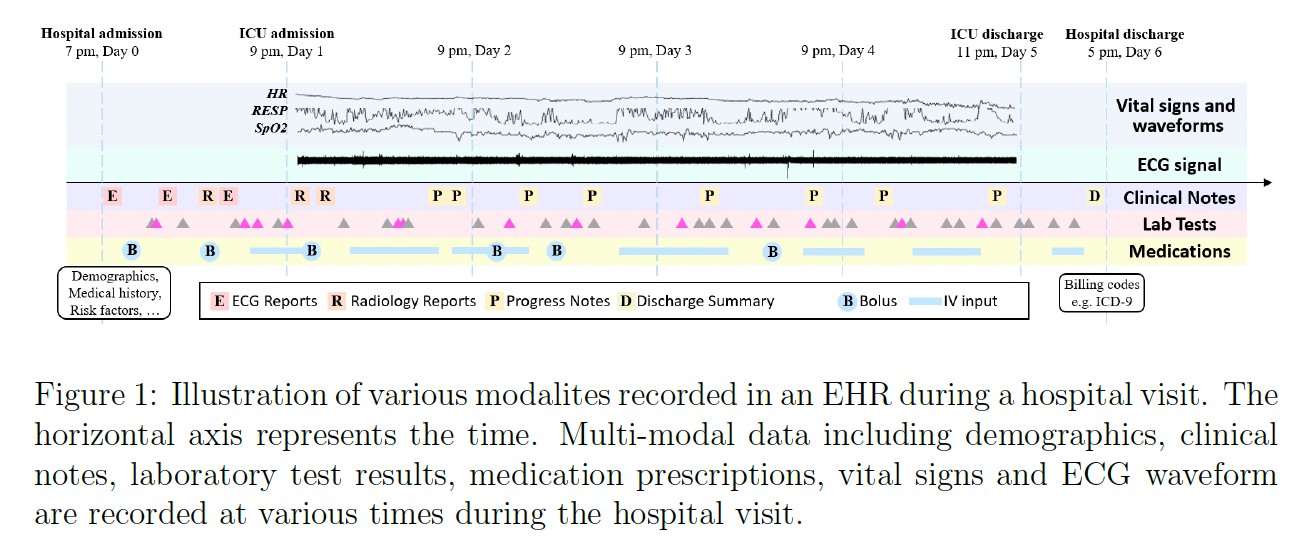

Leveraging electronic health record (EHR) data for healthcare predictive analytics has received growing attention in recent years. High-throughput phenotyping is one such analytics task: machine learning algorithms derive phenotypes (sets of clinical conditions) from EHR data to characterize patients with different diseases. Most existing phenotyping algorithms consider only structured information such as diagnoses and medications. Better patient characterization requires also considering other data modalities, such as lab test results, progress notes, and vital signs. Fusing multi-modal EHR data is non-trivial because the modalities are of different types, are recorded at different time scales, and are related in complex ways.

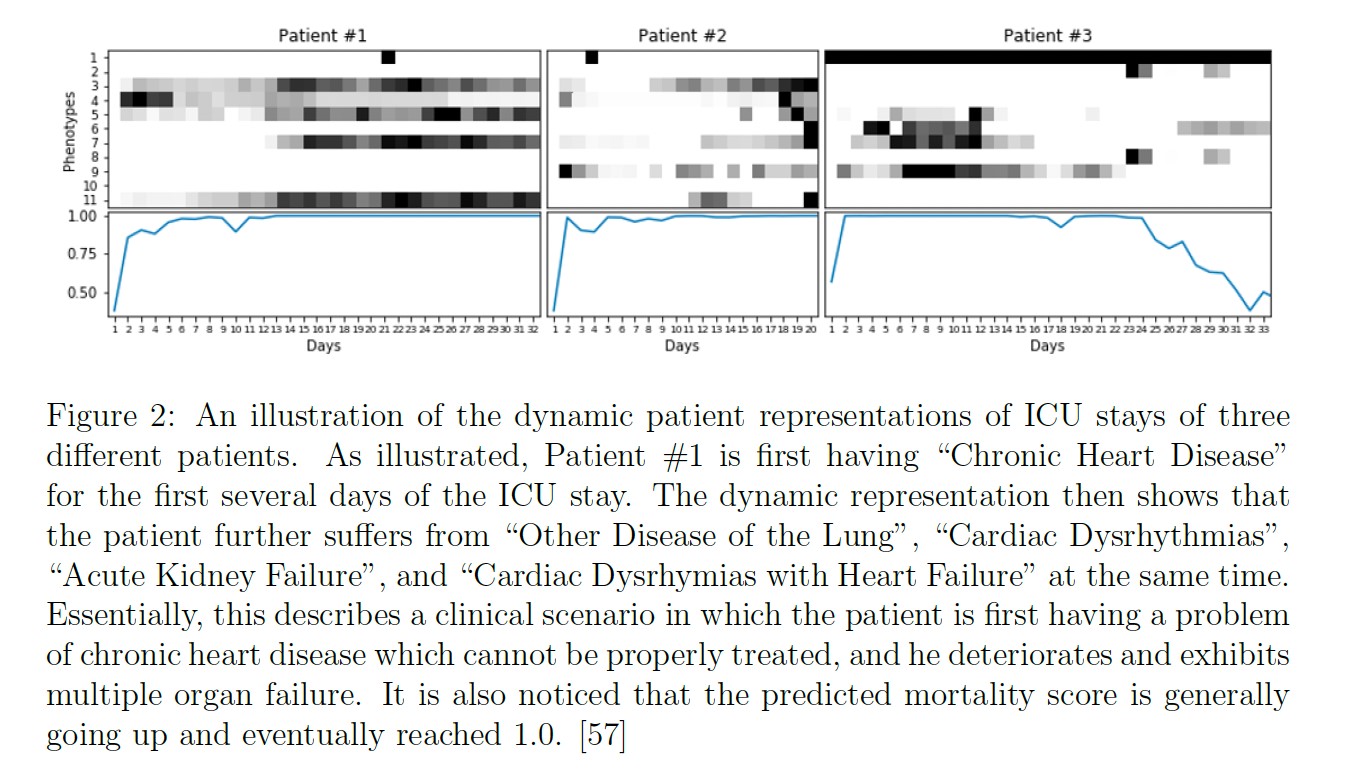

In this project, we propose a deep tensor factorization framework for inferring highly interpretable phenotypes and dynamic patient representations from multi-modal EHR data. At its core, the framework contains a temporal tensor model that captures, as part of model learning, (a) the interactions among structured data such as diagnoses, medications, and lab tests, (b) the underlying phenotypes (as tensor factors), and (c) the temporal evolution of each patient's phenotype proportions (the dynamic representation). Because the evolution of a patient's health condition over time is complex, deep models such as recurrent neural networks and the neural Hawkes process can be integrated to regularize the dynamic representations. In addition, the framework can be combined with a deep network architecture that learns to extract features from physiological time series such as vital signs and ECG waveforms, so that the associated predictive analytics tasks can be carried out in a patient-specific manner.
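To make the idea of a temporal tensor model more concrete, here is a minimal sketch under simplifying assumptions; it is not the project's actual model. It assumes a nonnegative count tensor indexed by patient, time window, diagnosis, and medication, and a CP-style factorization in which the shared factors `A` and `B` play the role of phenotype definitions while `U[p, t, :]` is patient p's phenotype loading at time window t (the dynamic representation). In place of the recurrent neural network or neural Hawkes regularizers mentioned above, it uses a plain first-order smoothness penalty (weight `lam`) on consecutive time windows. All function names, parameters, and the projected-gradient fitting procedure are hypothetical choices for illustration.

```python
import numpy as np


def reconstruct(U, A, B):
    # X_hat[p, t, d, m] = sum_r U[p, t, r] * A[d, r] * B[m, r]
    return np.einsum("ptr,dr,mr->ptdm", U, A, B)


def fit_temporal_cp(X, rank, lam=0.1, lr=1e-2, n_iter=500, seed=0):
    """Hypothetical sketch: fit a nonnegative CP-style model with a time-varying patient factor.

    X: nonnegative array of shape (patients, time windows, diagnoses, medications).
    Returns U (patients, time, rank), A (diagnoses, rank), B (medications, rank)
    and the loss recorded before each update step.
    """
    rng = np.random.default_rng(seed)
    P, T, D, M = X.shape
    U = rng.random((P, T, rank))  # dynamic patient representations
    A = rng.random((D, rank))     # diagnosis loadings of each phenotype
    B = rng.random((M, rank))     # medication loadings of each phenotype
    losses = []
    for _ in range(n_iter):
        R = reconstruct(U, A, B) - X          # residual of the data-fit term
        diff = U[:, 1:, :] - U[:, :-1, :]     # change between consecutive windows
        loss = 0.5 * np.sum(R ** 2) + 0.5 * lam * np.sum(diff ** 2)
        losses.append(loss)

        # Gradients of the squared reconstruction error w.r.t. each factor.
        gU = np.einsum("ptdm,dr,mr->ptr", R, A, B)
        gA = np.einsum("ptdm,ptr,mr->dr", R, U, B)
        gB = np.einsum("ptdm,ptr,dr->mr", R, U, A)

        # Gradient of the temporal smoothness penalty (a stand-in regularizer).
        gU[:, 1:, :] += lam * diff
        gU[:, :-1, :] -= lam * diff

        # Projected gradient step: clip at zero to keep the factors nonnegative.
        U = np.maximum(U - lr * gU, 0.0)
        A = np.maximum(A - lr * gA, 0.0)
        B = np.maximum(B - lr * gB, 0.0)
    return U, A, B, losses


if __name__ == "__main__":
    rng = np.random.default_rng(1)
    # Synthetic data: 4 patients, 5 time windows, 6 diagnoses, 5 medications, 2 phenotypes.
    X = reconstruct(rng.random((4, 5, 2)), rng.random((6, 2)), rng.random((5, 2)))
    U, A, B, losses = fit_temporal_cp(X, rank=2)
    print(f"loss: {losses[0]:.3f} -> {losses[-1]:.3f}")
```

Keeping `A` and `B` shared across patients and time is what makes each rank-one component readable as a phenotype, while letting only `U` vary over time yields the dynamic representation; swapping the smoothness penalty for a learned sequence model would be the natural next step toward the regularization described above.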

Grant Support:

The project is supported by the Research Grants Council under General Research Fund project HKBU 12201219.

For further information on this research topic, please contact Prof. William K. Cheung.
