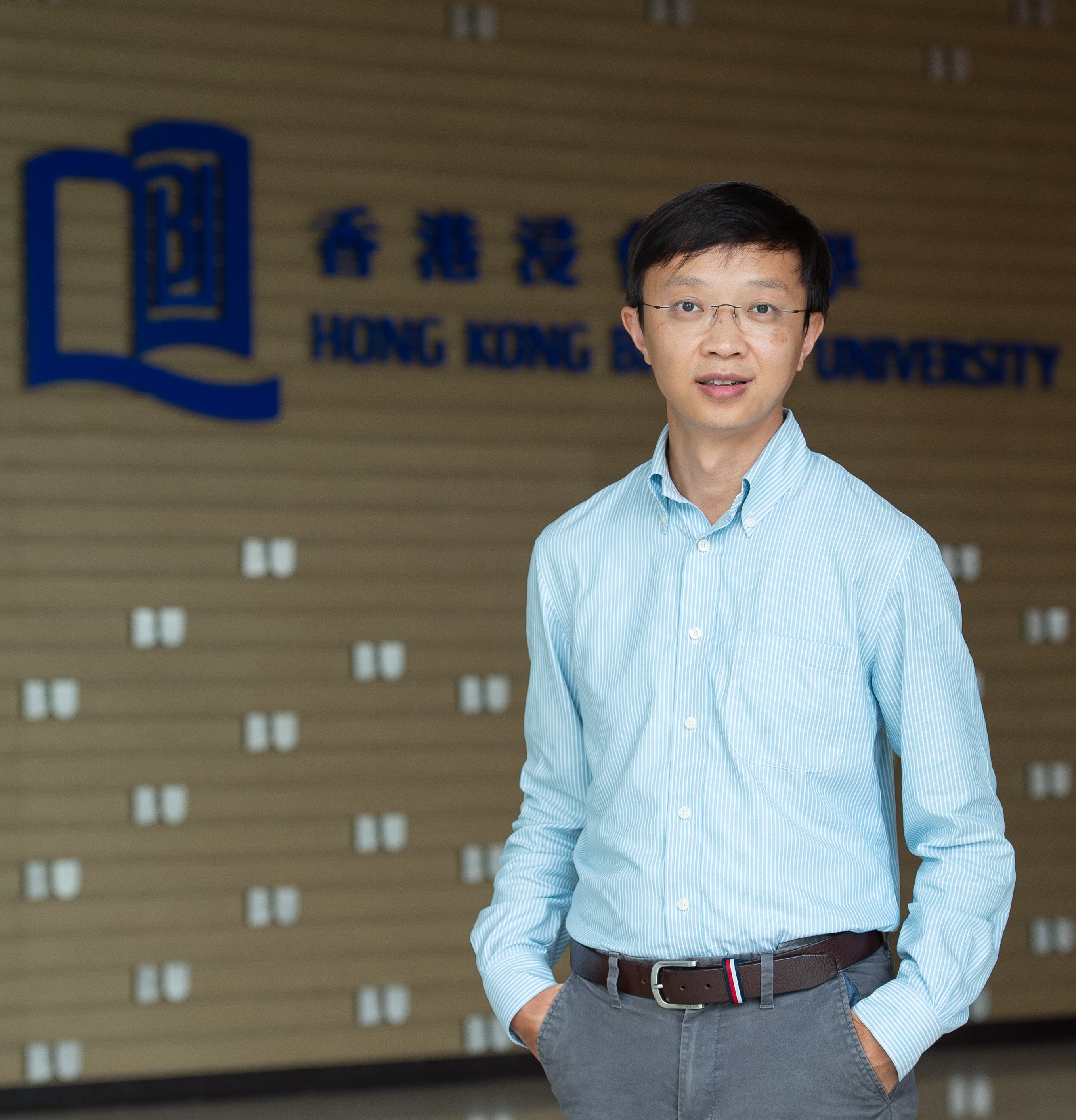

Associate Professor

Hong Kong Baptist University, Department of Computer Science

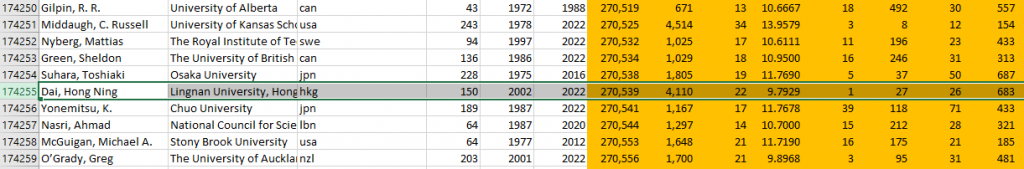

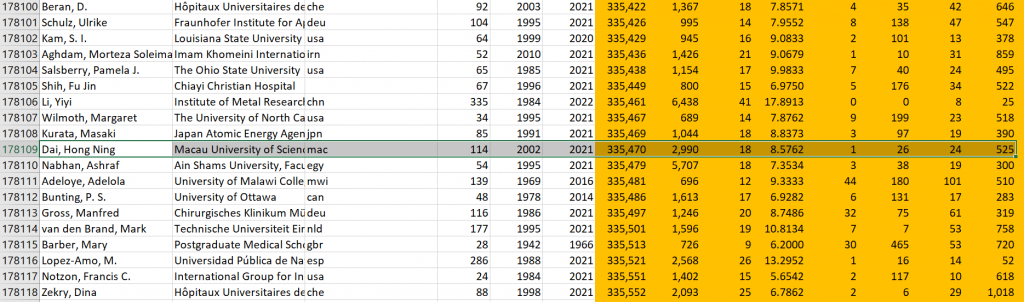

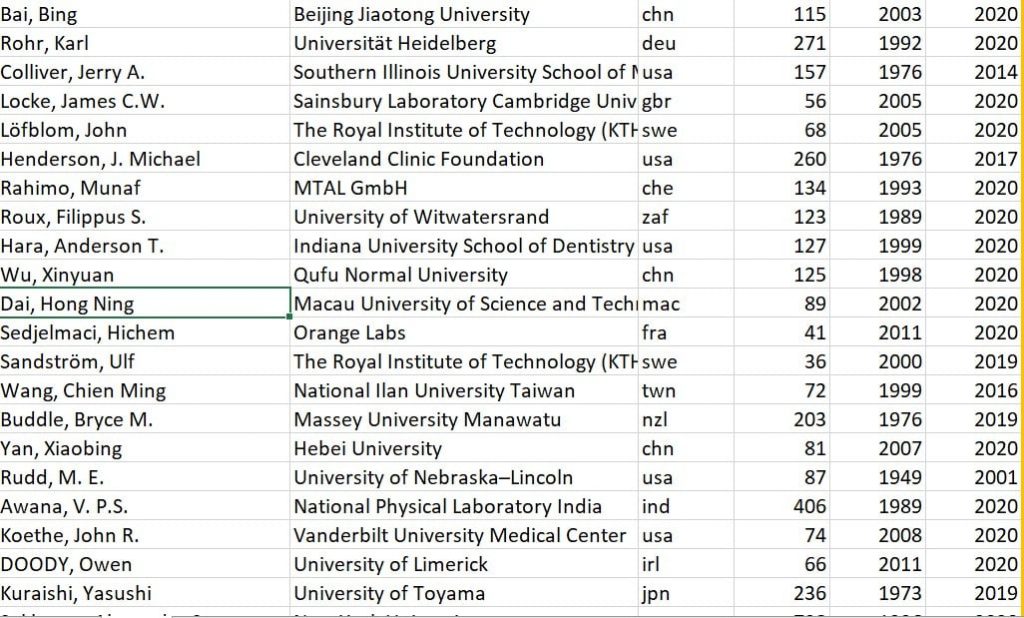

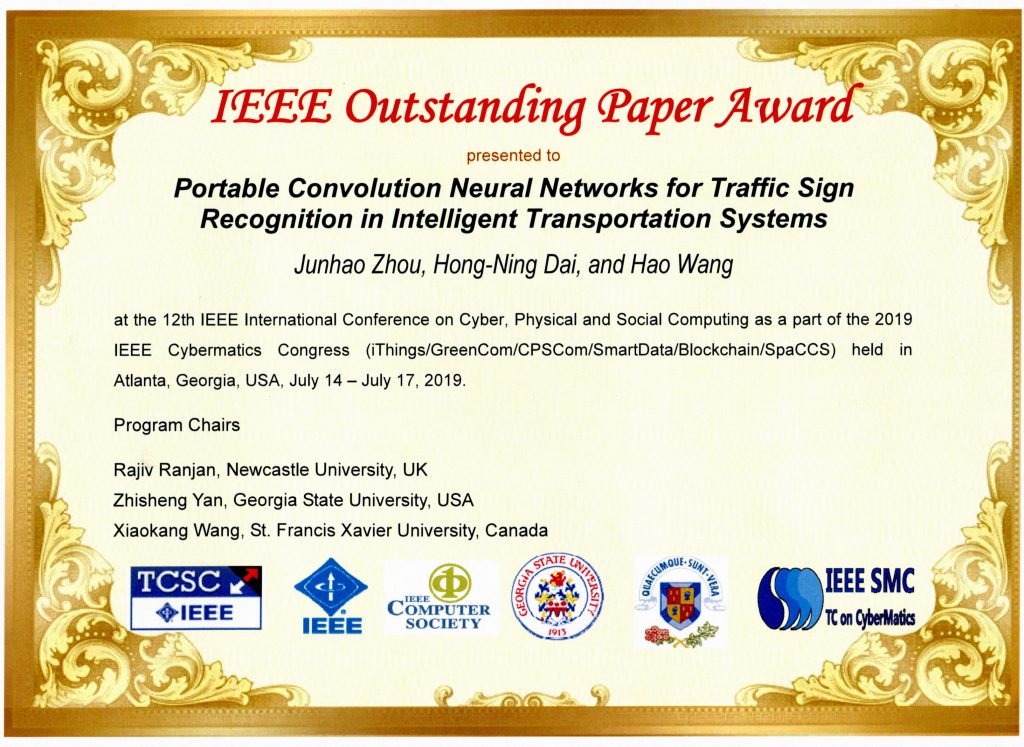

Henry Hong-Ning Dai is an associate professor in the Department of Computer Science, Hong Kong Baptist University. He obtained a Ph.D. in Computer Science and Engineering from the Department of Computer Science and Engineering at the Chinese University of Hong Kong and a D.Eng. in Computer Technology Application from the Department of Computer Science and Engineering at Shanghai Jiao Tong University. Before joining Hong Kong Baptist University, he was with the School of Computer Science and Engineering at Macau University of Science and Technology as an assistant professor/associate professor from 2010 to 2021, and with the Department of Computing and Decision Sciences, Lingnan University, Hong Kong, as an associate professor from 2021 to 2022. He has published more than 300 papers in refereed journals and conferences, including Proceedings of the IEEE, IEEE Journal on Selected Areas in Communications (JSAC), IEEE Transactions on Mobile Computing (TMC), ACM Transactions on Software Engineering and Methodology (TOSEM), IEEE Transactions on Parallel and Distributed Systems (TPDS), IEEE Transactions on Computers (TC), IEEE Transactions on Knowledge and Data Engineering (TKDE), IEEE Transactions on Wireless Communications, the IEEE/ACM International Conference on Software Engineering (ICSE), the ACM/IEEE International Conference on Automated Software Engineering (ASE), IEEE Conference on Computer Communications (INFOCOM), IEEE International Conference on Data Engineering (ICDE), AAAI Conference on Artificial Intelligence (AAAI), the Conference on Information and Knowledge Management (CIKM), ACM Symposium on Cloud Computing (SoCC), IEEE Transactions on Neural Networks and Learning Systems (TNNLS), ACM Computing Surveys (CSUR), IEEE Communications Surveys & Tutorials, etc. His publications have received more than 24,000 citations. He has also led over 12 research projects as Principal Investigator (PI) or Co-PI, with funding totaling HK$16M. He has won more than 17 awards. He also holds 1 U.S. patent and 1 Australian innovation patent. He is a senior member of ACM, IEEE, and EAI. He has served as an associate editor/editor for IEEE Communications Surveys & Tutorials, IEEE Transactions on Intelligent Transportation Systems, IEEE Transactions on Industrial Informatics, IEEE Transactions on Industrial Cyber-Physical Systems, Ad Hoc Networks (Elsevier), and Connection Science (Taylor & Francis). He has served as a PC member of top-tier conferences, including WWW'24 (The Web Conference), SDM'24, ASE'23, KDD'23, CIKM'19, and ICPADS'19. He has also served as general chair/PC chair of many international conferences.

His other profiles and CV:

Hong Kong Baptist University, Department of Computer Science

Lingnan University, Department of Computing and Decision Sciences

Macau University of Science and Technology, Faculty of Information Technology

Macau University of Science and Technology, Faculty of Information Technology

Chinese University of Hong Kong, Department of Information Engineering

Ph.D. in Computer Science and Engineering

Chinese University of Hong Kong

DEng. in Computer Science and Engineering

Shanghai Jiao Tong University

MEng. in Computer Science and Engineering

South China University of Technology

BEng. in Computer Science and Engineering

South China University of Technology

Henry Hong-Ning Dai has served as Principal Investigator (PI) or Co-PI on over 10 research projects, with funding totaling HK$16M.

| Role | Grant Source | Project Title | Reference No. | Period | Amount (HKD) |
|---|---|---|---|---|---|
| PI | Seed Funding for Collaborative Research Grants | iSurveillance: Towards Cyber-Physical Secure Factory in Industry 4.0 | RC-SFCRG/23-24/R2/SCI/06 | 2025 - 2028 | 1,047,750 |
| Co-PI | FDS, UGC, Hong Kong | Space-Air-Ground Integrated Networks: System Modeling and Performance Optimization | FDS16/E15/24 | 2025 - 2027 | 776,810 |
| Co-PI | BNBU-HKBU Cross-Campus "1+1+1" Research Collaboration Scheme | Optimizing and Accelerating Graph Neural Networks for Large-Scale Irregular IoT Sensor Data | N/A | 2025 - 2027 | 2,150,668 |
| PI | Start-up Grant for New Academics | Towards Scalable, Privacy-Preserving, and Secure Social Metaverse | SCI-162832 | 2023 - 2024 | 200,000 |
| Co-PI | FDS, UGC, Hong Kong | A Study of Distributed Service-aware Wireless Cellular Networks (DS-WCNs): From User Demand Modeling to Performance Optimization | UGC/FDS16/E02/22 | 2022 - 2025 | 1,047,750 |
| PI | COMP Department Start-up Fund | Complex Network-based Data Analytics of Heterogeneous Blockchain Data | COMP-179432 | 2022 - 2023 | 300,000 |
| Co-PI | NSFC, China | Studies of Key Technologies on Collaborative Cloud-Device-Edge in Sensor Cloud Systems | 62172046 | 2021 - 2024 | 748,684 (RMB 590,000) |
| Sub-project leader | Macao Key R&D Projects of FDCT, Macau | STEP Perpetual Learning based Collective Intelligence: Theories and Methodologies | 0025/2019/AKP | 2020 - 2023 | 6,000,000.00 |
| PI | FDCT, Macau | Key Technologies to Enable Ultra Dense Wireless Networks | 0026/2018/A1 | 2018 - 2021 | 1,000,000.00 |
| PI | FDCT, Macau | Large Scale Wireless Ad Hoc Networks: Performance Analysis and Performance Improvement | 096/2013/A3 | 2014 - 2017 | 1,356,100.00 |
| Co-PI | NSFC, China | Studies on Network Resilience in Self-Organized Intelligent Manufacturing Internet of Things | 61672170 | 2017 - 2020 | 799,442 (RMB 630,000) |
| PI | FDCT, Macau | Studies on Multi-channel Networks using Directional Antennas | 036/2011/A | 2012 - 2014 | 495,900.00 |
| Co-PI | FDCT, Macau | Idle Sense Scheme in Non-saturated Wireless LANs | 081/2012/A3 | 2013 - 2016 | 1,102,200.00 |
| Total | Total awarded research grants as PI/Co-PI (2012 - present) | | | | 16,961,338 |

Copyright and all rights therein are retained by the authors or by the respective copyright holders (including Springer Verlag, Elsevier, ACM, IEEE Press, Wiley, etc.). You can download them only if you follow their restrictions (e.g., for private use or academic use). The online version may be slightly different from the final version. They are put online just for the convenience of academic sharing.

You may refer to Henry Dai's personal website, DBLP, or Google Scholar for a more comprehensive list of publications.

Although diverse augmented reality (AR) and virtual reality (VR) systems have garnered extensive attention from both industry and academia, they have also raised increasing security and privacy concerns. AR/VR devices, equipped with various sensors, can continuously collect sensitive human data and track users, making them targets for malicious attacks. To better understand the threats posed by the acoustic channel in AR/VR systems, we investigate a novel concealed side-channel attack that utilizes inaudible acoustic signals transmitted and detected by commercial off-the-shelf VR headsets or mobile phones. From the received variations in the acoustic channel caused by a VR victim's hand movements, unique features can be extracted to reconstruct the input contents (e.g., passwords). We refer to this attack system as AcouListener, implemented as a camouflaged mobile app that can be installed on either an AR/VR or a mobile platform. We conduct extensive experiments targeting three common VR attack scenarios: (1) inferring victims' unlocking patterns, (2) inferring victims' handwriting patterns, and (3) inferring victims' typing words and passwords on virtual keyboards. Experimental results show that AcouListener achieves an average F1-score of 84% in unlocking pattern recognition, 95% in handwriting recognition, and 80% in typing recognition. Furthermore, we present corresponding countermeasures against this inaudible acoustic side-channel attack.

Time series anomaly detection has garnered significant research attention due to growing demands for temporal data monitoring across diverse domains. Despite the rapid advent of unsupervised anomaly detection models, existing approaches face two critical challenges in understanding the mechanisms of reconstruction-based models when handling diverse temporal dependencies: (1) the insufficient exploration of complex inter-timestamp relationships encompassing both long-term and short-term dependencies, and (2) the lack of integrated frameworks for jointly learning localized patterns and global temporal characteristics. To address these challenges, we propose the novel Multi-Scale Hypergraph Transformer (MSHTrans), which leverages the capacity of hypergraphs for modeling multi-order temporal dependencies. Particularly, our method employs multi-scale downsampling to derive complementary fine-grained and coarse-grained representations, integrated with trainable hypergraph neural networks that can adaptively learn inter-timestamp relationships. The framework further integrates time series decomposition to systematically extract periodic and trend components from multi-granular features, thereby enhancing long-term dependency modeling. Through synergistic integration of learned local patterns and global temporal structures, the model achieves comprehensive time series reconstruction for effective anomaly detection. Extensive experiments demonstrate that MSHTrans outperforms state-of-the-art competitors with an average performance improvement of 8.21% (without point adjustment) and 3.52% (with point adjustment).
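To make the multi-scale and decomposition ideas in this abstract concrete, here is a minimal sketch. It is not the MSHTrans implementation: the function names, the average-pooling downsampler, and the moving-average trend extraction are all assumptions chosen for illustration, and the hypergraph learning part is omitted entirely.

```python
def downsample(series, scale):
    """Average non-overlapping windows of length `scale` (trailing remainder is dropped)."""
    n = len(series) // scale
    return [sum(series[i * scale:(i + 1) * scale]) / scale for i in range(n)]


def moving_average(series, window):
    """Centered moving average with edge replication; `window` is assumed odd."""
    half = window // 2
    padded = [series[0]] * half + list(series) + [series[-1]] * half
    return [sum(padded[i:i + window]) / window for i in range(len(series))]


def decompose(series, window=3):
    """Split a series into a smooth trend and the remaining (periodic plus noise) part."""
    trend = moving_average(series, window)
    residual = [x - t for x, t in zip(series, trend)]
    return trend, residual


# Hypothetical toy series: an upward trend with a period-2 oscillation.
series = [1.0, 3.0, 2.0, 4.0, 3.0, 5.0, 4.0, 6.0]

# Fine-grained (scale 1) and coarse-grained (scales 2, 4) views of the same series.
multi_scale = {scale: downsample(series, scale) for scale in (1, 2, 4)}

trend, residual = decompose(series)
```

Coarser scales smooth out the short-term oscillation and expose the long-term trend, while the scale-1 view keeps local detail; a reconstruction model can then draw on both.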

A cross-shard transaction (CTX) is parsed into two sub-transactions, which are then executed in the source and destination shards, respectively. However, the client who submits the original transaction pays only one unit of the transaction fee. Thus, sub-transactions experience much higher queueing delays than regular intra-shard transactions when they wait in shard transaction pools. From the perspective of a sharded blockchain, this is unfair to the original transactions that are parsed into sub-transactions. Therefore, how to ensure fairness for all CTXs while securing the atomicity of any pair of sub-transactions becomes a critical challenge. State-of-the-art solutions have addressed the transaction atomicity challenge, but the literature still lacks a dedicated incentive mechanism to ensure the fairness of CTXs. To this end, we propose an incentive mechanism named Justitia, which aims to achieve fairness by motivating blockchain proposers to prioritize the CTXs queueing in transaction pools when they package transactions to generate a new block. We rigorously show that Justitia upholds the fundamental properties of a sharded blockchain, including security, atomicity, and fairness. We then implement a prototype of Justitia on an open-source sharding-enabled blockchain testbed. Our experiments using historical Ethereum transactions demonstrate that i) Justitia guarantees fairness while processing CTXs, ii) its token-issuance mechanism does not lead to unstable economic inflation, and iii) Justitia incurs only 20%-80% of the queueing latency of the Monoxide protocol for CTXs.

The problem of federated learning (FL) where users are distributed and partitioned into clusters has been addressed through the framework of clustered federated learning (CFL). However, when users are unwilling to share their cluster identities due to privacy concerns, CFL's training becomes difficult. To address these issues, we introduce an innovative Efficient and Robust Secure Aggregation scheme for CFL, dubbed EBS-CFL. The proposed method supports training CFL while maintaining user cluster identity confidentiality. It detects potential poisoning attacks without compromising individual client gradients by discarding negatively correlated gradients and aggregating positively correlated ones using a weighted approach. The server also authenticates correct gradient encoding by clients. Client-side overhead is \(O(ml + m^2)\) for communication and \(O(m^2 l)\) for computation. When \(m = 1\), computational efficiency is at least \(\log n\) times better than other methods, where \(n\) is the number of clients, \(m\) is the number of cluster identities, and \(l\) is the gradient size. Our method's theoretical efficiency and security are validated through comprehensive analysis and extensive experiments.
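The filtering rule mentioned in this abstract (discard negatively correlated gradients, aggregate positively correlated ones with weights) can be sketched in plaintext as follows. This is only an illustration under stated assumptions: EBS-CFL performs the check under secure aggregation without exposing individual gradients, whereas this sketch works on raw vectors; the `reference` direction and the choice of cosine similarity as the weight are hypothetical.

```python
import math


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def cosine(u, v):
    """Cosine similarity; returns 0.0 if either vector is all zeros."""
    nu, nv = math.sqrt(dot(u, u)), math.sqrt(dot(v, v))
    if nu == 0.0 or nv == 0.0:
        return 0.0
    return dot(u, v) / (nu * nv)


def filter_and_aggregate(gradients, reference):
    """Weighted average of gradients; non-positively correlated ones get weight 0."""
    weights = [max(0.0, cosine(g, reference)) for g in gradients]
    total = sum(weights)
    if total == 0.0:
        # Every gradient was rejected: return a zero update.
        return [0.0] * len(reference)
    return [
        sum(w * g[i] for w, g in zip(weights, gradients)) / total
        for i in range(len(reference))
    ]


# Hypothetical example: two honest clients and one client pushing the opposite way.
grads = [[1.0, 0.0], [0.0, 1.0], [-1.0, -1.0]]
reference = [1.0, 1.0]  # assumed trusted direction, e.g. a previous global update
aggregated = filter_and_aggregate(grads, reference)
```

In this toy case the third gradient points against the reference and is dropped, so the result is the equally weighted average of the first two.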

Graph Neural Networks (GNNs) have exhibited remarkable capabilities for dealing with graph-structured data. However, recent studies have revealed their fragility to adversarial attacks, where imperceptible perturbations to the graph structure can easily mislead predictions. To enhance adversarial robustness against attacks, some methods attempt to learn robust representation through improving GNN architectures. Subsequently, another approach suggests that these GNNs might taint feature information and have poor classifier performance, leading to the introduction of Graph Contrastive Learning (GCL) methods to build a refining-classifying pipeline. However, existing methods focus on global-local contrastive strategies, which fail to address the robustness issues inherent in the contexts of adversarial robustness. To address these challenges, we propose a novel paradigm named MIRACLE to enhance the robustness of learned representations by shifting the focus to local neighborhoods. Specifically, the dual neighborhood contrastive learning strategy is designed to extract local topological and semantic information. Paired with a neighbor estimator, the strategy can learn robust representations that are resilient to adversarial edges. Additionally, we provide an improved GNN classifier to further mine the learned representation. Theoretical analyses provide a stricter lower bound of mutual information, ensuring the convergence of MIRACLE. Extensive experiments validate the effectiveness of MIRACLE compared to state-of-the-art baselines against various adversarial attacks.

Virtual Reality (VR) has accelerated its prevalent adoption in emerging metaverse applications, but it is not a fundamentally new technology. On the one hand, most VR operating systems (OS) are based on off-the-shelf mobile OS (e.g., Android OS). As a result, VR apps also inevitably inherit privacy and security deficiencies from conventional mobile apps. On the other hand, in contrast to traditional mobile apps, VR apps can achieve an immersive experience via diverse VR devices, such as head-mounted displays, body sensors, and controllers. However, achieving this requires the extensive collection of privacy-sensitive human biometrics (e.g., hand-tracking and face-tracking data). Moreover, VR apps have been typically implemented by 3D gaming engines (e.g., Unity), which also contain intrinsic security vulnerabilities. Inappropriate use of these technologies may incur privacy leaks and security vulnerabilities, although these issues have not received significant attention compared to the proliferation of diverse VR apps. In this paper, we develop a security and privacy assessment tool, namely the VR-SP detector for VR apps. The VR-SP detector has integrated program static analysis tools and privacy-policy analysis methods. Using the VR-SP detector, we conduct a comprehensive empirical study on 900 popular VR apps. We obtain the original apps from the popular SideQuest app store and extract Android Package (APK) files via the Meta Quest 2 device. We evaluate the security vulnerabilities and privacy data leaks of these VR apps through VR app analysis, taint analysis, privacy policy analysis, and user review analysis. We find that a number of security vulnerabilities and privacy leaks widely exist in VR apps. Moreover, our results also reveal conflicting representations in the privacy policies of these apps and inconsistencies of the actual data collection with the privacy-policy statements of the apps. Further, user reviews also indicate their privacy concerns about relevant biometric data. Based on these findings, we make suggestions for the future development of VR apps.

User authentication is evolving with expanded applications and innovative techniques. New authentication approaches utilize RF signals to sense specific human characteristics, offering a contactless and nonintrusive solution. However, these RF signal-based methods struggle with challenges in open-world scenarios, i.e., dynamic environments, daily behaviors with unrestricted postures, and identification of unauthorized users with security threats. In this paper, we present an open-world user authentication system, OpenAuth, which leverages a commercial off-the-shelf (COTS) mmWave radar to sense unrestricted human postures and behaviors for identifying individuals. First, OpenAuth utilizes a MUSIC-based neural network imaging model to eliminate environmental clutter and generate environment-independent human silhouette images. Then, the human silhouette images are normalized to consistent topological structures of human postures, ensuring robustness against unrestricted human postures. Next, fine-grained body features are extracted from these environment-independent and posture-independent human silhouette images using a metric learning model. To eliminate potential security threats that arise from unauthorized users, OpenAuth synthesizes data placeholders for enhancing unauthorized user identification. Finally, a k-NN-based authentication model is constructed to authenticate users' identities. Experiments in real environments show that the proposed OpenAuth achieves an average authentication accuracy of 93.4% and false acceptance rate (FAR) of 1.8% in open-world scenarios.

Federated learning provides a privacy-preserving modeling schema for distributed data, which coordinates multiple clients to collaboratively train a global model. However, data stored in different clients may be collected from diverse domains, and the resulting feature shift tends to degrade the performance of the global model. In this paper, we propose a Federated Domain-Independent Prototype Learning (FedDP) method with Alignments of Representation and Parameter Spaces for Feature Shift. Concretely, FedDP aims to eliminate the domain-specific information and explore the pure representations via information bottleneck, thus integrating the local and global domain-independent prototypes, respectively. To align the cross-domain representation spaces, the global domain-independent prototypes serve as the supervised signals to enable local intra-class representations to approach them. Further, to mitigate the divergences of optimization directions between multiple clients induced by the feature shift, the global representations are produced by the global model on the client side and guide the learning of local representations, thus unifying the parameter spaces of multiple local models. We derive the theoretical lower bound of the optimization objective based on mutual information, which is transformed into a computable loss. The proposed FedDP can be applied in scenarios with homogeneous and heterogeneous models. Extensive experiments are conducted on three challenging multi-domain datasets. The experimental results illustrate the superiority of FedDP compared with state-of-the-art federated learning methods.

Inadequate resource coordination and control can result in poor quality of experience (QoE) for user devices in heterogeneous edge-enabled cyber-physical systems. Unfortunately, in a cooperative edge network, existing studies have rarely jointly optimized communication, computing resources, and batch size for QoE guarantee when controlling task offloading. To this end, we investigate the problem of harnessing bandwidth, computation, and batch size for fair quality of experience (HARBOR) in a practical collaborative edge-AI environment, where UEs have different accuracy requirements of inference services and edge devices possess different batch processing capabilities. Specifically, we introduce the task completion efficiency as the task-completion-time-to-deadline ratio to quantify individual QoE. Then, we formulate problem HARBOR as a mixed-integer nonlinear programming problem with constraints on accuracy, bandwidth, computation, hard task deadlines, and so on. The objective is to minimize the maximum task completion efficiency among all tasks to achieve task-level fairness. After providing the NP-hardness proof for HARBOR, we devise an efficient scheme named e-HARBOR with a competitive ratio guarantee, to solve the decoupled sub-problems of HARBOR with a calibrated long short-term memory network for resource prediction. Both testbed and simulation experiments demonstrate that the proposed scheme works efficiently and scales well compared to baselines.
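The fairness objective described in this abstract (task completion efficiency as the completion-time-to-deadline ratio, minimized in the worst case) can be illustrated with a small sketch. It only evaluates the objective over hand-written candidate allocations; it is not the e-HARBOR scheme, and the function names and numbers are hypothetical.

```python
def completion_efficiency(completion_time, deadline):
    """Task completion efficiency: completion time divided by deadline (lower is better)."""
    if deadline <= 0:
        raise ValueError("deadline must be positive")
    return completion_time / deadline


def worst_case_efficiency(tasks):
    """Maximum efficiency ratio over a list of (completion_time, deadline) pairs."""
    return max(completion_efficiency(t, d) for t, d in tasks)


def pick_fairest(allocations):
    """Return the name of the allocation with the smallest worst-case ratio (min-max)."""
    return min(allocations, key=lambda name: worst_case_efficiency(allocations[name]))


# Hypothetical outcomes of two resource allocations for the same two tasks.
candidates = {
    "allocation_a": [(2.0, 4.0), (9.0, 10.0)],  # ratios 0.50 and 0.90
    "allocation_b": [(3.0, 4.0), (7.0, 10.0)],  # ratios 0.75 and 0.70
}
best = pick_fairest(candidates)
```

Allocation A finishes the first task sooner but leaves the second task close to its deadline, so the min-max criterion prefers allocation B, whose worst ratio is lower. A ratio above 1 would indicate a missed hard deadline.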

Research on unmanned aerial vehicles (UAVs) has focused on enhancing wireless communications while overlooking the potential risk of UAVs acting as adversaries. Due to their mobility and flexibility, UAV eavesdroppers pose an immeasurable threat to legitimate wireless transmissions. However, the existing fixed jamming scheme without cooperation cannot counter the flexible and dynamic UAV eavesdropping. In this article, a cooperative intelligent jamming scheme is proposed, authorizing ground jammers (GJs) to interfere with UAV eavesdroppers, generating specific jamming shields between UAV eavesdroppers and legitimate users. Toward this end, we formulate a secrecy capacity maximization problem and model the problem as a decentralized partially observable Markov decision process (Dec-POMDP). To address the challenge of the huge state space and action space with network dynamics, we leverage a deep reinforcement learning (DRL) algorithm with a dueling network and double-Q learning (i.e., dueling double deep Q-network) to train policy networks. Then, we propose a multi-agent mixing network framework (QMIX)-based collaborative jamming algorithm to enable GJs to independently make decisions without sharing local information. Additionally, we perform extensive simulations to validate the superiority of our proposed scheme and present useful insights into practical implementation by elucidating the relationship between the deployment settings of GJs and the instantaneous secrecy capacity.
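For readers unfamiliar with the quantity being maximized, the textbook definition of instantaneous secrecy capacity is given below. The symbols are assumptions introduced here for illustration (the paper's exact formulation may differ): \(\gamma_{\mathrm{u}}\) is the signal-to-interference-plus-noise ratio at the legitimate user and \(\gamma_{\mathrm{e}}\) is that at the eavesdropper.

```latex
C_s = \left[ \log_2\!\left(1 + \gamma_{\mathrm{u}}\right) - \log_2\!\left(1 + \gamma_{\mathrm{e}}\right) \right]^{+},
\qquad [x]^{+} = \max(x, 0)
```

Under this definition, jamming helps when it lowers \(\gamma_{\mathrm{e}}\) more than it lowers \(\gamma_{\mathrm{u}}\), which is why the placement and power of ground jammers matter.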

With the development of the theory and technology of computer science, machine or computer painting is increasingly being explored in the creation of art. Machine-made works are referred to as artificial intelligence (AI) artworks. Early methods of AI artwork generation have been classified as non-photorealistic rendering (NPR) and, latterly, neural-style transfer methods have also been investigated. As technology advances, the variety of machine-generated artworks and the methods used to create them have proliferated. However, there is no unified and comprehensive system to classify and evaluate these works. To date, no work has generalised methods of creating AI artwork including learning-based methods for painting or drawing. Moreover, the taxonomy, evaluation and development of AI artwork methods face many challenges. This paper is motivated by these considerations. We first investigate current learning-based methods for making AI artworks and classify the methods according to art styles. Furthermore, we propose a consistent evaluation system for AI artworks and conduct a user study to evaluate the proposed system on different AI artworks. This evaluation system uses six criteria: beauty, color, texture, content detail, line and style. The user study demonstrates that the six-dimensional evaluation index is effective for different types of AI artworks.

This paper presents Chat with MES (CWM), an AI agent system, which integrates LLMs into the Manufacturing Execution System (MES), serving as the "ears, mouth, and the brain". This system promotes a paradigm shift in MES interactions from Graphical User Interface (GUI) to natural language interface, offering a more natural and efficient way for workers to manipulate the manufacturing system. Compared with the traditional GUI, both the maintenance costs for developers and the learning costs and the complexity of use for workers are significantly reduced. This paper also contributes two technical improvements to address the challenges of using LLM-Agent in serious manufacturing scenarios. The first one is Request Rewriting, designed to rephrase or automatically follow up on non-standardized and ambiguous requests from users. The second innovation is the Multi-Step Dynamic Operations Generation, which is a pre-execution planning technique similar to Chain-of-Thought (COT), used to enhance the success rate of handling complex tasks involving multiple operations. A case study conducted on a simulated garment MES with 55 manually designed requests demonstrates the high execution accuracy of CWM (80%) and the improvement achieved through query rewriting (9.1%) and Multi-Step Dynamic Operations Generation (18.2%). The source code of CWM, along with the simulated MES and benchmark requests, is publicly accessible.

User authentication is evolving with expanded application scenarios and innovative techniques. New authentication approaches utilize RF signals to sense specific human behaviors and characteristics, such as faces, specific gestures, etc., offering a contactless and nonintrusive solution. However, these RF signal-based methods struggle with challenges in open-world scenarios, i.e., dynamic environments, daily behaviors with unrestricted postures, and identification of unauthorized users with security threats. In this paper, we present an open-world user authentication system, OpenAuth, which leverages a commercial off-the-shelf (COTS) mmWave radar to sense unrestricted human postures and behaviors for identifying individuals. First, OpenAuth utilizes a MUSIC-based neural network imaging model to eliminate environmental clutter and generate environment-independent human silhouette images. Then, the human silhouette images are normalized to consistent topological structures of human postures, ensuring robustness against unrestricted human postures. Based on the environment-independent and posture-independent human silhouette images, OpenAuth further extracts fine-grained body features through a metric learning model for user authentication. To eliminate potential security threats that arise from frequent accesses by unauthorized users, OpenAuth synthesizes data placeholders for enhancing the applicability of unauthorized user identification. Finally, a k-NN-based authentication model is constructed based on the extracted body features to authenticate users' identities. Experiments in real environments show that the proposed OpenAuth achieves an average authentication accuracy of 93.4% and false acceptance rate (FAR) of 1.8% in open-world scenarios.

Although Virtual Reality (VR) has accelerated its prevalent adoption in emerging metaverse applications, it is not a fundamentally new technology. On the one hand, most VR operating systems (OS) are based on off-the-shelf mobile OS (e.g., Android). As a result, VR apps also inherit privacy and security deficiencies from conventional mobile apps. On the other hand, in contrast to conventional mobile apps, VR apps can achieve an immersive experience via diverse VR devices, such as head-mounted displays, body sensors, and controllers, though achieving this requires the extensive collection of privacy-sensitive human biometrics (e.g., hand-tracking and face-tracking data). Moreover, VR apps have been typically implemented by 3D gaming engines (e.g., Unity), which also contain intrinsic security vulnerabilities. Inappropriate use of these technologies may incur privacy leaks and security vulnerabilities, although these issues have not received significant attention compared to the proliferation of diverse VR apps. In this paper, we develop a security and privacy assessment tool, namely the VR-SP detector for VR apps. The VR-SP detector has integrated program static analysis tools and privacy-policy analysis methods. Using the VR-SP detector, we conduct a comprehensive empirical study on 500 popular VR apps. We obtain the original apps from the popular SideQuest app store and extract APK files via the Meta Oculus Quest 2 device. We evaluate security vulnerabilities and privacy data leaks of these VR apps by VR app analysis, taint analysis, and privacy-policy analysis. We find that a number of security vulnerabilities and privacy leaks widely exist in VR apps. Moreover, our results also reveal conflicting representations in the privacy policies of these apps and inconsistencies of the actual data collection with the privacy-policy statements of the apps. Based on these findings, we make suggestions for the future development of VR apps. This open-source tool is available at https://github.com/Henrykwokkk/Meta-detector.

As an essential component in Ethereum and other blockchains, token assets are accessed by diverse smart contracts, although smart contracts are unprivileged by default. Permission management of smart contracts is therefore crucial to prevent token assets from being manipulated by unauthorized attackers. Despite recent efforts on investigating the accessibility of privileged functions or state variables in smart contracts to unauthorized users, little attention has been paid to how an accessible function can be manipulated by attackers to steal users' digital assets. This attack is mainly caused by the permission re-delegation (PRD) vulnerability. In this work, we propose PrettySmart, a bytecode-level Permission redelegation vulnerability detector for Smart contracts. We first conduct an empirical study on 0.43 million open-source smart contracts and find that five types of widely-used permission constraints dominate 98% of studied contracts. We then propose a mechanism to infer these permission constraints adopted by smart contracts by exploiting the bytecode instruction sequences. We next devise an algorithm to identify permission constraints that can be bypassed by an initially unauthorized attacker. We evaluate PrettySmart on two real-world datasets of smart contracts, including those from reported vulnerabilities and a public real-world dataset of smart contracts. The experimental results demonstrate the effectiveness of PrettySmart, which achieves the highest precision score and detects 118 new PRD vulnerabilities. PrettySmart is available at https://github.com/Z-Zhijie/PrettySmart.

Since the advent of decentralized financial applications based on blockchains, new attacks that take advantage of manipulating the order of transactions have emerged. To this end, order fairness protocols are devised to prevent such order manipulations. However, existing order fairness protocols adopt time-consuming mechanisms that bring huge computation overheads and defer the finalization of transactions to the following rounds, eventually compromising system performance. In this work, we present Auncel, a novel consensus protocol that achieves both order fairness and high performance. Auncel leverages a weight-based strategy to order transactions, enabling all transactions in a block to be committed within one consensus round, without costly computation and further delays. Furthermore, Auncel achieves censorship resistance by integrating the consensus protocol with the fair ordering strategy, ensuring all transactions can be ordered fairly. To reduce the overheads introduced by the fair ordering strategy, we also design optimization mechanisms, including dynamic transaction compression and an adjustable replica proposal strategy. We implement a prototype of Auncel based on HotStuff and conduct extensive experiments. Experimental results show that Auncel can increase the throughput by 6x and reduce the confirmation latency by 3x compared with state-of-the-art order fairness protocols.

Recently, stateless blockchains have been proposed to alleviate the storage overhead for nodes. A stateless blockchain achieves storage-consensus parallelism, where storage workloads are offloaded from on-chain consensus, enabling more resource-constrained nodes to participate in the consensus. However, existing stateless blockchains still suffer from limited throughput. In this paper, we present Porygon, a novel stateless blockchain with three-dimensional (3D) parallelism. First, Porygon separates the storage and consensus of transactions, as in a stateless blockchain, achieving storage-consensus parallelism. This first-dimensional parallelism divides the processing of transactions into several stages and scales the network by supporting more nodes in the system. Based on such a design, we then propose a pipeline mechanism to achieve second-dimensional inter-block parallelism, where relevant stages of processing transactions are pipelined efficiently, thereby reducing transaction latency. Finally, Porygon presents a sharding mechanism to achieve third-dimensional inner-block parallelism. By sharding the executions of transactions of a block and adopting a lightweight cross-shard coordination mechanism, Porygon can effectively execute both intra-shard and cross-shard transactions, consequently achieving outstanding transaction throughput. We evaluate the performance of Porygon by extensive experiments on an implemented prototype and large-scale simulations. Compared with existing blockchains, Porygon boosts throughput by up to 20x, reduces network usage by more than 50x, and simultaneously requires only 5MB of storage consumption per node.

By integrating the merits of aerial, terrestrial, and satellite communications, the space-air-ground integrated network (SAGIN) is an emerging solution that can provide massive access, seamless coverage, and reliable transmissions for global-range applications. In SAGINs, the uplink connectivity from ground users (GUs) to the satellite is essential because it ensures global-range data collections and interactions, thereby paving the technical foundation for practical implementations of SAGINs. In this article, we aim to establish an accurate analytical model for the uplink connectivity of SAGINs in consideration of the global distributions of both GUs and aerial vehicles (AVs). Particularly, we investigate the uplink path connectivity of SAGINs, which refers to the probability of establishing the end-to-end path from GUs to the satellite with or without AV relays. However, such an investigation on SAGINs is challenging because all GUs and AVs are approximately distributed on a spherical surface (instead of the horizontal surface), resulting in the complexity of network modeling. To address this challenge, this paper presents a new analytical approach based on spherical stochastic geometry. Based on this approach, we derive the analytical expression of the path connectivity in SAGINs. Extensive simulations confirm the accuracy of the analytical model.

Being the largest Initial Coin Offering project, EOSIO has attracted great interest in cryptocurrency markets. Despite its popularity and prosperity (e.g., 26,311,585,008 token transactions occurred from June 8, 2018 to Aug. 5, 2020), there is almost no work investigating the EOSIO token ecosystem. To fill this gap, we are the first to conduct a systematic investigation of the EOSIO token ecosystem by conducting a comprehensive graph analysis of the entire on-chain EOSIO data (nearly 135 million blocks). We construct token-creator graphs, token-contract creator graphs, token-holder graphs, and token-transfer graphs to characterize token creators, holders, and transfer activities. Through graph analysis, we have obtained many insightful findings and observed some abnormal trading patterns. Moreover, we propose a fake-token detection algorithm to identify tokens generated by fake users or fake transactions and analyze their corresponding manipulation behaviors. Evaluation results also demonstrate the effectiveness of our algorithm. The EOSIO dataset is available at https://xblock.pro/#/dataset/15.

Automated classification of breast cancer subtypes from digital pathology images has been an extremely challenging task due to the complicated spatial patterns of cells in the tissue micro-environment. While newly proposed graph transformers are able to capture more long-range dependencies to enhance accuracy, they largely ignore the topological connectivity between graph nodes, which is nevertheless critical to extract more representative features to address this difficult task. In this paper, we propose a novel connectivity-aware graph transformer (CGT) for phenotyping the topology connectivity of the tissue graph constructed from digital pathology images for breast cancer classification. Our CGT seamlessly integrates connectivity embedding to node feature at every graph transformer layer by using local connectivity aggregation, in order to yield more comprehensive graph representations to distinguish different breast cancer subtypes. In light of the realistic intercellular communication mode, we then encode the spatial distance between two arbitrary nodes as connectivity bias in self-attention calculation, thereby allowing the CGT to distinctively harness the connectivity embedding based on the distance of two nodes. We extensively evaluate the proposed CGT on a large cohort of breast carcinoma digital pathology images stained by Haematoxylin & Eosin. Experimental results demonstrate the effectiveness of our CGT, which outperforms state-of-the-art methods by a large margin. Codes are released on https://github.com/wang-kang-6/CGT.

Code commenting plays an important role in program comprehension. Automatic comment generation helps improve software maintenance efficiency. The code comments to annotate a method mainly include header comments and snippet comments. The header comment aims to describe the functionality of the entire method, thereby providing a general comment at the beginning of the method. The snippet comment appears at multiple code segments in the body of a method, where a code segment is called a code snippet. Both of them help developers quickly understand code semantics, thereby improving code readability and code maintainability. However, existing automatic comment generation models mainly focus more on header comments because there are public datasets to validate the performance. By contrast, it is challenging to collect datasets for snippet comments because it is difficult to determine their scope. Even worse, code snippets are often too short to capture complete syntax and semantic information. To address this challenge, we propose a novel Snippet Comment Generation approach called SCGen. First, we utilize the context of the code snippet to expand the syntax and semantic information. Specifically, 600,243 snippet code-comment pairs are collected from 959 Java projects. Then, we capture variables from code snippets and extract variable-related statements from the context. After that, we devise an algorithm to parse and traverse abstract syntax tree (AST) information of code snippets and corresponding context. Finally, SCGen generates snippet comments after inputting the source code snippet and corresponding AST information into a sequence-to-sequence-based model. We conducted extensive experiments on the dataset we collected to evaluate our SCGen. Our approach obtains 18.23 in BLEU-4 metrics, 18.83 in METEOR, and 23.65 in ROUGE-L, which outperforms state-of-the-art comment generation models.

In wireless content caching networks (WCCNs), a user's content consumption crucially depends on the assortment offered. Here, the assortment refers to the recommendation list. An appropriate user choice model is essential for greater revenue. Therefore, in this paper, we propose a practical multinomial logit choice model to capture users' content requests. Based on this model, we first derive the individual demand distribution per user and then investigate the effect of the interplay between the assortment decision and cache planning on WCCNs' achievable revenue. A revenue maximization problem is formulated while incorporating the influences of the screen size constraints of users and the cache capacity budget of the base station (BS). The formulated optimization problem is a non-convex integer programming problem. For ease of analysis, we decompose it into two subproblems, i.e., the personalized assortment decision problem and the cache planning problem. By using structure-oriented geometric properties, we design an iterative algorithm with examinable quadratic time complexity to solve the non-convex assortment problem in an optimal manner. The cache planning problem is proved to be a 0-1 Knapsack problem and thus can be addressed by a dynamic programming approach with pseudo-polynomial time complexity. Afterwards, an alternating optimization method is used to optimize the two types of variables until convergence. It is shown by simulations that the proposed scheme outperforms various existing benchmark schemes.
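
The dynamic programming step mentioned for cache planning can be illustrated with a textbook 0-1 knapsack solver. This is only a minimal sketch, not the paper's algorithm: the function name `plan_cache`, the per-content `values` (expected revenue) and the integer `sizes` are hypothetical stand-ins for quantities the abstract does not specify.

```python
from typing import List, Tuple


def plan_cache(values: List[float], sizes: List[int], capacity: int) -> Tuple[float, List[int]]:
    """Textbook 0-1 knapsack: pick contents to cache under a capacity budget.

    values[i] is a hypothetical revenue for caching content i,
    sizes[i] is its (integer) size, capacity is the cache budget.
    Runs in O(n * capacity) time, i.e. pseudo-polynomial.
    """
    n = len(values)
    # best[i][c] = best total value using only the first i contents with budget c
    best = [[0.0] * (capacity + 1) for _ in range(n + 1)]
    for i in range(1, n + 1):
        v, s = values[i - 1], sizes[i - 1]
        for c in range(capacity + 1):
            best[i][c] = best[i - 1][c]  # option 1: skip content i-1
            if s <= c and best[i - 1][c - s] + v > best[i][c]:
                best[i][c] = best[i - 1][c - s] + v  # option 2: cache it

    # Backtrack to recover which contents were selected.
    chosen: List[int] = []
    c = capacity
    for i in range(n, 0, -1):
        if best[i][c] != best[i - 1][c]:
            chosen.append(i - 1)
            c -= sizes[i - 1]
    chosen.reverse()
    return best[n][capacity], chosen
```

For instance, `plan_cache([6.0, 10.0, 12.0], [1, 2, 3], 5)` selects the second and third contents, which exactly fill the budget of 5.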

Terrestrial-satellite networks (TSNs) can provide worldwide users with ubiquitous and seamless network services. Meanwhile, malicious eavesdropping is posing tremendous challenges on secure transmissions of TSNs due to their widescale wireless coverage. In this paper, we propose an aerial bridge scheme to establish secure tunnels for legitimate transmissions in TSNs. With the assistance of unmanned aerial vehicles (UAVs), massive transmission links in TSNs can be secured without impacts on legitimate communications. Owing to the stereo position of UAVs and the directivity of directional antennas, the constructed secure tunnel can significantly relieve confidential information leakage, resulting in the precaution of wiretapping. Moreover, we establish a theoretical model to evaluate the effectiveness of the aerial bridge scheme compared with the ground relay, non-protection, and UAV jammer schemes. Furthermore, we conduct extensive simulations to verify the accuracy of theoretical analysis and present useful insights into the practical deployment by revealing the relationship between the performance and other parameters, such as the antenna beamwidth, flight height and density of UAVs.

For complicated input-output systems with nonlinearity and stochasticity, Deep State Space Models (SSMs) are effective for identifying systems in the latent state space, which is of great significance for representation, forecasting, and planning in online scenarios. However, most SSMs are designed for discrete-time sequences and are inapplicable when the observations are irregular in time. To solve this problem, we propose a novel continuous-time SSM named Ordinary Differential Equation Recurrent State Space Model (ODE-RSSM). ODE-RSSM incorporates an ordinary differential equation (ODE) network (ODE-Net) to model the continuous-time evolution of latent states between adjacent time points. Inspired by the equivalent linear transformation on integration limits, we propose an efficient reparameterization method for solving batched ODEs with non-uniform time spans in parallel for efficiently training the ODE-RSSM with irregularly sampled sequences. We also conduct extensive experiments to evaluate the proposed ODE-RSSM and the baselines on three input-output datasets, one of which is a rollout of a private industrial dataset with strong long-term delay and uncertainty. The results demonstrate that the ODE-RSSM achieves better performance than other baselines in open loop prediction even if the time spans of predicted points are uneven and the distribution of length is changeable.
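
One common way to read "linear transformation on integration limits" is the substitution t = t0 + s * dt with s in [0, 1], which turns dz/dt = f(z, t) on [t0, t0 + dt] into dz/ds = dt * f(z, t0 + s * dt) on the shared interval [0, 1]. The sketch below assumes this reading, which the abstract does not spell out, and uses a plain fixed-step RK4 loop over scalar states. The function `integrate_batched` and its parameters are hypothetical, and in a tensor library each list comprehension would become a single batched operation.

```python
from typing import Callable, List, Sequence


def integrate_batched(
    f: Callable[[float, float], float],
    z0: Sequence[float],
    t0: Sequence[float],
    spans: Sequence[float],
    steps: int = 100,
) -> List[float]:
    """Integrate dz/dt = f(z, t) for every sample i over [t0[i], t0[i] + spans[i]].

    All samples share one normalized time grid s in [0, 1], so samples with
    different time spans advance together in the same loop.
    """
    h = 1.0 / steps  # common step size in normalized time
    z = list(z0)

    def g(zs: Sequence[float], s: float) -> List[float]:
        # Rescaled dynamics: dz/ds = span * f(z, t0 + s * span)
        return [d * f(zi, ti + s * d) for zi, ti, d in zip(zs, t0, spans)]

    s = 0.0
    for _ in range(steps):
        k1 = g(z, s)
        k2 = g([zi + 0.5 * h * k for zi, k in zip(z, k1)], s + 0.5 * h)
        k3 = g([zi + 0.5 * h * k for zi, k in zip(z, k2)], s + 0.5 * h)
        k4 = g([zi + h * k for zi, k in zip(z, k3)], s + h)
        z = [
            zi + (h / 6.0) * (a + 2.0 * b + 2.0 * c + d)
            for zi, a, b, c, d in zip(z, k1, k2, k3, k4)
        ]
        s += h
    return z
```

With `f = lambda z, t: -z`, unit initial states and spans of 0.5, 1.0 and 2.0, each result should be close to `exp(-span)`, even though all three samples were stepped on the same grid.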

As computer programs run on top of blockchain, smart contracts have proliferated a myriad of decentralized applications while bringing security vulnerabilities, which may cause huge financial losses. Thus, it is crucial and urgent to detect the vulnerabilities of smart contracts. However, existing fuzzers for smart contracts are still inefficient at detecting sophisticated vulnerabilities that require specific vulnerable transaction sequences to trigger. To address this challenge, we propose a novel vulnerability-guided fuzzer based on reinforcement learning, namely RLF, for generating vulnerable transaction sequences to detect such sophisticated vulnerabilities in smart contracts. In particular, we first model the process of fuzzing smart contracts as a Markov decision process to construct our reinforcement learning framework. We then design an appropriate reward with consideration of both vulnerability and code coverage so that it can effectively guide our fuzzer to generate specific transaction sequences to reveal vulnerabilities, especially for the vulnerabilities related to multiple functions. We conduct extensive experiments to evaluate RLF's performance. The experimental results demonstrate that our RLF outperforms state-of-the-art vulnerability-detection tools (e.g., detecting 8%-69% more vulnerabilities within 30 minutes). The open-source RLF is available at https://github.com/Demonhero0/rlf.

Payment channel networks (PCNs) are considered as a prominent solution for scaling blockchain, where users can establish payment channels and complete transactions in an off-chain manner. However, it is non-trivial to schedule transactions in PCNs and most existing routing algorithms suffer from the following challenges: 1) one-shot optimization, 2) privacy-invasive channel probing, 3) vulnerability to DoS attacks. To address these challenges, we propose a privacy-aware transaction scheduling algorithm with defence against DoS attacks based on deep reinforcement learning (DRL), namely PTRD. Specifically, considering both the privacy preservation and long-term throughput into the optimization criteria, we formulate the transaction-scheduling problem as a Constrained Markov Decision Process. We then design PTRD, which extends off-the-shelf DRL algorithms to constrained optimization with an additional cost critic-network and an adaptive Lagrangian multiplier. Moreover, considering the distribution nature of PCNs, in which each user schedules transactions independently, we develop a distributed training framework to collect the knowledge learned by each agent so as to enhance learning effectiveness. With the customized network design and the distributed training framework, PTRD achieves a good balance between the optimization of the throughput and the minimization of privacy risks. Evaluations show that PTRD outperforms the state-of-the-art PCN routing algorithms by 2.7%–62.5% in terms of the long-term throughput while satisfying privacy constraints.
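
The constrained formulation can be illustrated with the standard Lagrangian relaxation of a constrained MDP: the agent maximizes reward minus a multiplier times cost, and the multiplier grows whenever the measured cost exceeds its budget. The sketch below shows only this generic dual-ascent update; it is not PTRD's actual update rule, and the names `lagrangian_reward`, `update_multiplier`, `cost_limit` and `lr` are hypothetical.

```python
def lagrangian_reward(reward: float, cost: float, lam: float) -> float:
    """Scalarized objective fed to the policy: throughput reward minus weighted privacy cost."""
    return reward - lam * cost


def update_multiplier(lam: float, avg_cost: float, cost_limit: float, lr: float = 0.1) -> float:
    """Projected dual ascent on the multiplier (generic rule, assumed for illustration).

    lam increases when the average cost violates the limit and decays
    toward zero when the constraint is satisfied; it never goes negative.
    """
    return max(0.0, lam + lr * (avg_cost - cost_limit))
```

In this sketch the multiplier acts as an automatically tuned price on privacy risk: persistent constraint violations make risky routing decisions progressively less attractive to the learner.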

Sharding has been considered a prominent approach to enhance the limited performance of blockchain. However, most sharding systems leverage a non-cooperative design, which lowers the fault tolerance resilience due to the decreased mining power as the consensus execution is limited to each separated shard. To this end, we present Benzene, a novel sharding system that enhances the performance by cooperation-based sharding while defending the per-shard security. Firstly, we establish a double-chain architecture for function decoupling. This architecture separates transaction-recording functions from consensus-execution functions, thereby enabling the cross-shard cooperation during consensus execution while preserving the concurrency nature of sharding. Secondly, we design a cross-shard block verification mechanism leveraging Trusted Execution Environment (TEE), via which miners can verify blocks from other shards during the cooperation process with minimized overheads. Finally, we design a voting-based consensus protocol for cross-shard cooperation. Transactions in each shard are confirmed by all shards that simultaneously cast votes, consequently achieving an enhanced fault tolerance and lowering the confirmation latency. We implement Benzene and conduct both prototype experiments and large-scale simulations to evaluate the performance of Benzene. Results show that Benzene outperforms existing sharding/non-sharding blockchain protocols. In particular, Benzene achieves a linearly-improved throughput with the increased number of shards (e.g., 32,370 transactions per second with 50 shards) and maintains a lower confirmation latency than Bitcoin (with more than 50 shards). Meanwhile, Benzene maintains a fixed fault tolerance at 1/3 even with the increased number of shards.

Graph auto-encoder is considered a framework for unsupervised learning on graph-structured data by representing graphs in a low dimensional space. It has been proved very powerful for graph analytics. In the real world, complex relationships in various entities can be represented by heterogeneous graphs that contain more abundant semantic information than homogeneous graphs. In general, graph auto-encoders based on homogeneous graphs are not applicable to heterogeneous graphs. In addition, little work has been done to evaluate the effect of different semantics on node embedding in heterogeneous graphs for unsupervised graph representation learning. In this work, we propose a novel Heterogeneous Graph Attention Auto-Encoders (HGATE) for unsupervised representation learning on heterogeneous graph-structured data. Based on the consideration of semantic information, our architecture of HGATE reconstructs not only the edges of the heterogeneous graph but also node attributes, through stacked encoder/decoder layers. Hierarchical attention is used to learn the relevance between a node and its meta-path based neighbors, and the relevance among different meta-paths. HGATE is applicable to transductive learning as well as inductive learning. Node classification and link prediction experiments on real-world heterogeneous graph datasets demonstrate the effectiveness of HGATE for both transductive and inductive tasks. HGATE is available at https://github.com/fantasy-sxy/HGATE.

Mobile crowdsensing (MCS) can promote data acquisition and sharing among mobile devices. Traditional MCS platforms are based on a triangular structure consisting of three roles: data requester, worker (i.e., sensory data provider) and MCS platform. However, this centralized architecture suffers from poor reliability and difficulties in guaranteeing data quality and privacy, and may even provide unfair incentives for users. In this paper, we propose a blockchain-based MCS platform, namely BlockSense, to replace the traditional triangular architecture of MCS models with a decentralized paradigm. To achieve the goal of trustworthiness of BlockSense, we present a novel consensus protocol, namely Proof-of-Data (PoD), which leverages miners to conduct useful data quality validation work instead of “useless” hash calculation. Meanwhile, in order to preserve the privacy of the sensory data, we design a homomorphic data perturbation scheme, through which miners can verify data quality without knowing the contents of the data. We have implemented a prototype of BlockSense and conducted case studies on campus, collecting over 7,000 data samples from workers’ mobile phones. Both simulations and real-world experiments show that BlockSense can not only improve system security, preserve data privacy and guarantee incentive fairness, but also verify data at least 5.6x faster than Ethereum smart contracts. BlockSense is available at https://github.com/imtypist/BlockSense.

Blockchain technology has gained popularity owing to the success of cryptocurrencies such as Bitcoin and Ethereum. Nonetheless, the scalability challenge largely limits its applications in many real-world scenarios. Off-chain payment channel networks (PCNs) have recently emerged as a promising solution by conducting payments through off-chain channels. However, the throughput of current PCNs does not yet meet the growing demands of large-scale systems because: 1) most PCN systems only focus on maximizing the instantaneous throughput while failing to consider network dynamics in a long-term perspective; 2) transactions are reactively routed in PCNs, in which intermediate nodes only passively forward every incoming transaction. These limitations of existing PCNs inevitably lead to channel imbalance and the failure of routing subsequent transactions. To address these challenges, we propose a novel proactive look-ahead algorithm (PLAC) that controls transaction flows from a long-term perspective and proactively prevents channel imbalance. In particular, we first conduct a measurement study on two real-world PCNs to explore their characteristics in terms of transaction distribution and topology. On that basis, we propose PLAC based on deep reinforcement learning (DRL), which directly learns the system dynamics from historical interactions of PCNs and aims at maximizing the long-term throughput. Furthermore, we develop a novel graph convolutional network-based model for PLAC, which extracts the inter-dependency between PCN nodes to consequently boost the performance. Extensive evaluations on real-world datasets show that PLAC improves on state-of-the-art PCN routing schemes by 6.6% to 34.9% in terms of the long-term throughput.

Multi-view clustering (MVC) aims at exploiting the consistent features within different views to divide samples into different clusters. Existing subspace-based MVC algorithms usually assume linear subspace structures and two-stage similarity matrix construction strategies, thereby posing challenges in imprecise low-dimensional subspace representation and inadequacy of exploring consistency. This paper presents a novel hierarchical representation for MVC method via the integration of intra-sample, intra-view, and inter-view representation learning models. In particular, we first adopt the deep autoencoder to adaptively map the original high-dimensional data into the latent low-dimensional representation of each sample. Second, we use the self-expression of the latent representation to explore the global similarity between samples of each view and obtain the subspace representation coefficients. Third, we construct the third-order tensor by arranging multiple subspace representation matrices and impose the tensor low-rank constraint to sufficiently explore the consistency among views. Being incorporated into a unified framework, these three models boost each other to achieve a satisfactory clustering result. Moreover, an alternating direction method of multipliers algorithm is developed to solve the challenging optimization problem. Extensive experiments on both simulated and real-world multi-view datasets show the superiority of the proposed method over eight state-of-the-art baselines.

Tensor analysis has received widespread attention in high-dimensional data learning. Unfortunately, the tensor data are often accompanied by arbitrary signal corruptions, including missing entries and sparse noise. How to recover the characteristics of the corrupted tensor data and make it compatible with the downstream clustering task remains a challenging problem. In this article, we study a generalized transformed tensor low-rank representation (TTLRR) model for simultaneously recovering and clustering the corrupted tensor data. The core idea is to find the latent low-rank tensor structure from the corrupted measurements using the transformed tensor singular value decomposition (SVD). Theoretically, we prove that TTLRR can recover the clean tensor data with a high probability guarantee under mild conditions. Furthermore, by using the transform adaptively learning from the data itself, the proposed TTLRR model can approximately represent and exploit the intrinsic subspace and seek out the cluster structure of the tensor data precisely. An effective algorithm is designed to solve the proposed model under the alternating direction method of multipliers (ADMMs) algorithm framework. The effectiveness and superiority of the proposed method against the compared methods are showcased over different tasks, including video/face data recovery and face/object/scene data clustering.

This article studies the PBFT-based sharded permissioned blockchain, which executes in either a local datacenter or a rented cloud platform. In such permissioned blockchain, the transaction (TX) assignment strategy could be malicious such that the network shards may possibly receive imbalanced transactions or even bursty-TX injection attacks. An imbalanced transaction assignment brings serious threats to the stability of the sharded blockchain. A stable sharded blockchain can ensure that each shard processes the arrived transactions timely. Since the system stability is closely related to the blockchain throughput, how to maintain a stable sharded blockchain becomes a challenge. To depict the transaction processing in each network shard, we adopt the Lyapunov Optimization framework. Exploiting drift-plus-penalty (DPP) technique, we then propose an adaptive resource-allocation algorithm, which can yield the near-optimal solution for each network shard while the shard queues can also be stably maintained. We also rigorously analyze the theoretical boundaries of both the system objective and the queue length of shards. The numerical results show that the proposed algorithm can achieve a better balance between resource consumption and queue stability than other baselines. We particularly evaluate two representative cases of bursty-TX injection attacks, i.e., the continued attacks against all network shards and the drastic attacks against a single network shard. The evaluation results show that the DPP-based algorithm can well alleviate the imbalanced TX assignment, and simultaneously maintain high throughput while consuming fewer resources than other baselines.
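
The drift-plus-penalty idea can be sketched for a single shard queue: in each slot, pick the allocation that minimizes V times the resource cost minus the current backlog times the service rate, then update the queue. This is the generic Lyapunov recipe rather than the paper's exact algorithm, and the `(resource_cost, service_rate)` options, the weight `v` and all function names below are hypothetical.

```python
from typing import List, Sequence, Tuple

Option = Tuple[float, float]  # (resource_cost, service_rate), hypothetical units


def dpp_choose(queue_len: float, options: Sequence[Option], v: float) -> Option:
    """Pick the option minimizing v * cost - queue_len * service (drift-plus-penalty)."""
    return min(options, key=lambda o: v * o[0] - queue_len * o[1])


def step_queue(queue_len: float, arrivals: float, service: float) -> float:
    """Standard queue update: serve what is possible, then admit new arrivals."""
    return max(queue_len - service, 0.0) + arrivals


def simulate(arrivals: Sequence[float], options: Sequence[Option], v: float) -> List[float]:
    """Run the per-slot rule over an arrival trace and return the backlog after each slot."""
    q = 0.0
    history: List[float] = []
    for a in arrivals:
        _cost, service = dpp_choose(q, options, v)
        q = step_queue(q, a, service)
        history.append(q)
    return history
```

With a short queue the cheap option wins; once the backlog grows, the term weighted by the queue length dominates and the rule switches to the faster, costlier option. A larger `v` saves resources at the price of a longer backlog, which is the trade-off the abstract describes between resource consumption and queue stability.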

The emergence of the infectious disease COVID-19 has challenged and changed the world in an unprecedented manner. The integration of wireless networks with edge computing (namely wireless edge networks) brings opportunities to address this crisis. In this paper, we aim to investigate the prediction of the infectious probability and propose precautionary measures against COVID-19 with the assistance of wireless edge networks. Due to the availability of the recorded detention time and the density of individuals within a wireless edge network, we propose a stochastic geometry-based method to analyze the infectious probability of individuals. The proposed method preserves the privacy of individuals in the system since it does not require knowing the location or trajectory of each individual. Moreover, we also consider three types of mobility models and the static model of individuals. Numerical results show that analytical results match well with simulation results, thereby validating the accuracy of the proposed model. Moreover, numerical results also offer many insightful implications. Thereafter, we also offer a number of countermeasures against the spread of COVID-19 based on wireless edge networks. This study lays the foundation toward predicting the infectious risk in realistic environments and points out directions in mitigating the spread of infectious diseases with the aid of wireless edge networks.

Network slicing has been widely agreed as a promising technique to accommodate diverse services for the Industrial Internet of Things (IIoT). Smart transportation, smart energy, and smart factory/manufacturing are the three key services to form the backbone of IIoT. Network slicing management is of paramount importance in the face of IIoT services with diversified requirements. It is important to have a comprehensive survey on intelligent network slicing management to provide guidance for future research in this field. In this paper, we provide a thorough investigation and analysis of network slicing management in its general use cases as well as specific IIoT services including smart transportation, smart energy and smart factory, and highlight the advantages and drawbacks across many existing works/surveys and this current survey in terms of a set of important criteria. In addition, we present an architecture for intelligent network slicing management for IIoT focusing on the above three IIoT services. For each service, we provide a detailed analysis of the application requirements and network slicing architecture, as well as the associated enabling technologies. Further, we present a deep understanding of network slicing orchestration and management for each service, in terms of orchestration architecture, AI-assisted management and operation, edge computing empowered network slicing, reliability, and security. For the presented architecture for intelligent network slicing management and its application in each IIoT service, we identify the corresponding key challenges and open issues that can guide future research. To facilitate the understanding of the implementation, we provide a case study of the intelligent network slicing management for integrated smart transportation, smart energy, and smart factory. 
Some lessons learnt include: 1) For smart transportation, it is necessary to explicitly identify service function chains (SFCs) for specific applications along with the orchestration of underlying VNFs/PNFs for supporting such SFCs; 2) For smart energy, it is crucial to guarantee both ultra-low latency and extremely high reliability; 3) For smart factory, resource management across heterogeneous network domains is of paramount importance. We hope that this survey is useful for both researchers and engineers on the innovation and deployment of intelligent network slicing management for IIoT.

The traditional production paradigm of large batch production does not offer flexibility toward satisfying the requirements of individual customers. A new generation of smart factories is expected to support new multivariety and small-batch customized production modes. For this, artificial intelligence (AI) is enabling higher value-added manufacturing by accelerating the integration of manufacturing and information communication technologies, including computing, communication, and control. The characteristics of a customized smart factory are: self-perception, operations optimization, dynamic reconfiguration, and intelligent decision-making. The AI technologies will allow manufacturing systems to perceive the environment, adapt to the external needs, and extract the process knowledge, including business models, such as intelligent production, networked collaboration, and extended service models. This article focuses on the implementation of AI in customized manufacturing (CM). The architecture of an AI-driven customized smart factory is presented. Details of intelligent manufacturing devices, intelligent information interaction, and construction of a flexible manufacturing line are showcased. The state-of-the-art AI technologies of potential use in CM, that is, machine learning, multiagent systems, Internet of Things, big data, and cloud-edge computing, are surveyed. The AI-enabled technologies in a customized smart factory are validated with a case study of customized packaging. The experimental results have demonstrated that the AI-assisted CM offers the possibility of higher production flexibility and efficiency. Challenges and solutions related to AI in CM are also discussed.

The incumbent Internet of Things (IoT) suffers from poor scalability and elasticity, exhibited in communication, computing, caching and control (4Cs) problems. The recent advances in deep reinforcement learning (DRL) algorithms can potentially address the above problems of IoT systems. In this context, this paper provides a comprehensive survey that overviews DRL algorithms and discusses DRL-enabled IoT applications. In particular, we first briefly review the state-of-the-art DRL algorithms and present a comprehensive analysis of their advantages and challenges. We then discuss applying DRL algorithms to a wide variety of IoT applications including smart grid, intelligent transportation systems, industrial IoT applications, mobile crowdsensing, and blockchain-empowered IoT. Meanwhile, the discussion of each IoT application domain is accompanied by an in-depth summary and comparison of DRL algorithms. Moreover, we highlight emerging challenges and outline future research directions in driving the further success of DRL in IoT applications.

The wide proliferation of various wireless communication systems and wireless devices has led to the arrival of the big data era in large-scale wireless networks. Big data of large-scale wireless networks has the key features of wide variety, high volume, real-time velocity, and huge value, leading to unique research challenges that are different from those of existing computing systems. In this article, we present a survey of the state-of-the-art big data analytics (BDA) approaches for large-scale wireless networks. In particular, we categorize the life cycle of BDA into four consecutive stages: Data Acquisition, Data Preprocessing, Data Storage, and Data Analytics. We then present a detailed survey of the technical solutions to the challenges in BDA for large-scale wireless networks according to each stage in the life cycle of BDA. Moreover, we discuss the open research issues and outline the future directions in this promising area.

Data analytics on massive manufacturing data can extract huge business value, but it also poses research challenges due to the heterogeneous data types, enormous volume, and real-time velocity of manufacturing data. This paper provides an overview of big data analytics in manufacturing Internet of Things (MIoT). It starts with a discussion of the necessities and challenges of big data analytics on manufacturing data in MIoT. Then, the enabling technologies of big data analytics of manufacturing data are surveyed and discussed. Moreover, this paper also outlines the future directions in this promising area.

We have experienced the proliferation of diverse blockchain platforms, including cryptocurrencies as well as private blockchains. In this chapter, we present an overview of blockchain intelligence. We first briefly review blockchain and smart contract technologies. We then introduce blockchain intelligence, which is essentially an amalgamation of blockchain and artificial intelligence. In particular, we discuss the opportunities of blockchain intelligence to address the limitations of blockchain and smart contracts.

Henry Hong-Ning Dai has been teaching the following courses since he joined the Department of Computer Science at Hong Kong Baptist University (HKBU). Before joining HKBU, he also taught many CS-related courses at Lingnan University (LNU), Hong Kong (from 2021 to 2022) and Macau University of Science and Technology (MUST), Macau (from 2010 to 2021).

To introduce the fundamental issues of big data management; to learn the latest techniques of data management and processing; and to conduct application case studies that show how data management techniques support large-scale data processing.

To introduce the organization of digital computers, the different components and their basic principles and operations.

Dr. Henry Dai is recruiting self-motivated Ph.D. students, research assistants, and postdocs with a strong background in computer science, electronic engineering, or applied mathematics to work in fields including (but not limited to) blockchain, the Internet of Things, and big data analytics. If you are interested in joining Henry's group, please feel free to send him an email with your CV, transcripts (undergraduate and postgraduate), and publications (if any). Please read Henry's research areas and recent publications before sending your email.

Mail Address:

Room 643, David C. Lam Building

Hong Kong Baptist University

55 Renfrew Road, Kowloon Tong, Hong Kong